Teaching in the Artificial Intelligence Age of ChatGPT

View recording on Panopto (restricted to MIT community).

Key Takeaways

- Bruff argues that generative AI tools are here to stay, and while there is debate on the acceptable use of these tools, we should expect that students will use them. Therefore, educators need to teach students how AI works and how to evaluate its accuracy.

- This new reality will require revising some learning objectives and updating assignments appropriately. Institutions will also need to update their academic integrity norms and policies.

- AI tools can help students focus on the more meaningful and relevant aspects of problem-solving rather than time-consuming and error-prone processes.

On Wednesday, March 22, 2023, we hosted Dr. Derek Bruff to discuss the landscape of AI tools for generating text and other media and the teaching choices they present.

Dr. Bruff began his talk by comparing ChatGPT to Cliff Clavin, the mailman from the 1980s sitcom Cheers who held forth authoritatively on a slew of topics, often embellishing the facts to look smart in front of his friends. ChatGPT and similar tools likewise "hallucinate" facts, the term now used for this phenomenon. Bruff explained that this happens because asking a generative AI tool to answer a question is different from asking it to generate natural language as if a human were answering, and these tools do the latter. Nonetheless, Bruff argues, when used intentionally, AI tools can augment and enhance student learning.

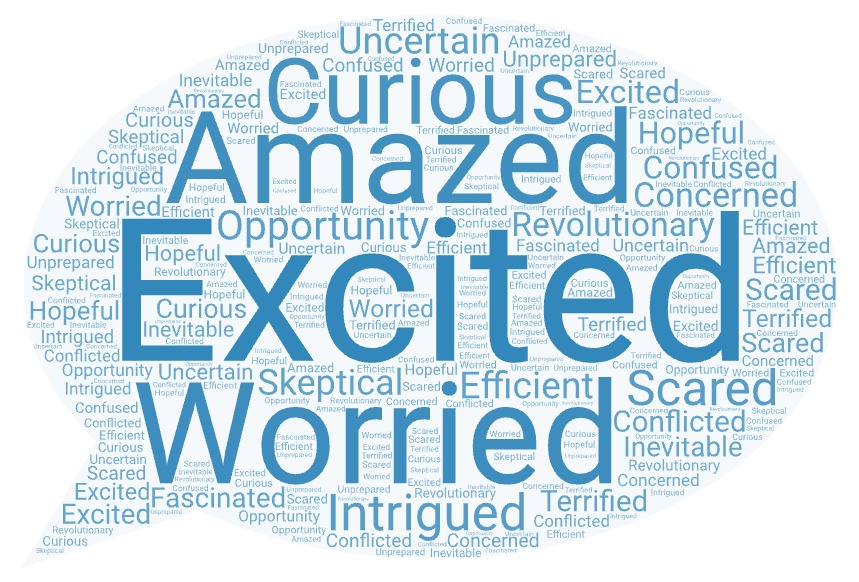

Since the launch of generative AI models like ChatGPT, the debate has run from sheer panic and calls for an outright ban to cautious optimism and declarations about the dawn of an “AI-powered golden age.” Bruff argued that these tools are not going away, and students will use them (for better or worse). Generative AI will be in search engines, Microsoft Office and Google products, and a host of other applications. While the discourse on their appropriate use is complicated, we need to engage in that discourse in order to advance teaching and learning in this new age of artificial intelligence.

Six Things to Know About AI Tools and Teaching

Dr. Bruff framed his talk around the following six things to know about AI tools and teaching.

We are going to have to start teaching our students how AI generation tools work.

AI is already readily available to students and will increasingly be used in professional settings, so Bruff recommends that we start teaching students how to use it well. For instance, instructors can ask students to use AI to query a topic they already know well, like a hobby or a sport they follow, and then evaluate the accuracy of the response. This task can help students identify the errors, hallucinated facts, and fake sources that these tools will inevitably produce. In a writing class, instructors can use AI to generate an essay for students to analyze, prompting them to consider the strengths and weaknesses of the product and how to improve upon it.

Bruff offered an example of an assignment, "Amanuenses to AI," that Prof. Ryan Cordell of the University of Illinois Urbana-Champaign created for his course Building a (Better) Book: "In tandem with a large language model, produce a short poem or 'literary' passage that you find interesting. Whether 'interesting' means you find it funny, or moving, or surprising, or weird, or disturbing, or otherwise is up to you. But your text should:

- Be a hybrid production: some combination of your prompting and an LLM’s output.

- Also, as we work toward that practical goal, however, our next goal is to begin probing the boundaries and assumptions of LLMs through comparison of different inputs and outputs.”

Cordell requires students to explore a variety of LLMs, documenting their pros and cons and evaluating their strengths and limitations (Cordell, 2023).

We might need to change our learning objectives because of AI generation tools.

Citing a recent blog post by John Warner, author of Why They Can't Write: Killing the Five-Paragraph Essay and Other Necessities (2018), Bruff stated that part of the problem is that many educators have been "conditioned to reward surface level competence, like fluent prose, with a decent grade" (Warner, 2022). Instead, we will need to restructure learning objectives to emphasize each step in the process (ideation and brainstorming, outlining, identifying sources, drafting and revising, etc.) rather than the finished product. Bruff offered an example from his own linear algebra class, where students use Wolfram Alpha. He persuasively argued that once students have engaged with and grappled with row reduction "by hand," letting them use the software to row-reduce large matrices frees them to model and solve more interesting, challenging, and authentic problems. In other words, the software lets students focus on the more meaningful and relevant aspects of problem solving rather than on the time-consuming and error-prone mechanics of row reduction.

Another example he presented was the "less content, more application" challenge proposed by Inara Scott of Oregon State University. To illustrate, she described how a business might use AI when implementing sustainable practices: "Thanks to ChatGPT, this business owner doesn't need an employee to create a generic list of sustainability strategies—ChatGPT can give it to them in about 3 seconds. What ChatGPT can't do is apply those strategies to the business, or explain their strategic suggestions in emotional and meaningful ways." (Scott, 2023, emphasis added).

We might keep some learning objectives, even though AI can shortcut the work.

There will be circumstances, Bruff posited, when generative AI is more efficient but counterproductive to learning. For instance, when students are first acquiring a skill, you would want them to refrain from using a tool that would circumvent their learning. To illustrate, Bruff, a self-described bird watcher, pointed to Merlin, a popular app he uses that helps bird enthusiasts identify birds by song and appearance. While Merlin is a wonderful tool for hobbyists, a student training to become an ornithologist who relied on the app would not develop the skills needed to identify species accurately and collect data with scientific confidence. In such cases, Bruff emphasized the importance of transparency: going over the learning objectives with your students and explaining why using generative AI in this circumstance would thwart their learning and leave them lacking critical competencies they will need in their professional lives.

This will mean updating (some) assignments so that ChatGPT is disallowed or not useful.

Given that AI tools are already ubiquitous, students will use them even if you try to ban them. Resistance is futile. Search engines like Bing and Google already incorporate AI into their platforms, and trying to restrict the use of generative AI will invite an undesirable number of academic integrity cases. The few so-called ChatGPT "detectors" have proven unreliable, producing both false positives and false negatives. There is also concern about inequities for underrepresented minority students (URMs), who may be incorrectly flagged for plagiarism at higher rates and would therefore bear a disproportionate share of the false positives produced by detection software (Stewart, 2013). Finally, students will most likely be expected to use these tools in their professions, so preparing them to be critical and efficient users may be an important new teaching goal.

So an outright ban on AI tools does not seem to be the answer. Instead, Bruff recommends creating assignments that make AI less useful. For example:

- Develop assignments related to the readings from the current week.

- When possible, draw on very recent events, since these tools only have access to information up to their last update.

- When relevant, ask students to incorporate their own experiences or professional goals.

- Create well-scaffolded assessments where each assignment builds on previous student work.

- Consider using multimedia (not entirely text-based) projects.

"Arranging fragments together into meaningful shapes is the most human thing of all." Claire Evans of the band Yacht, describing how the band felt about using AI to create its 2019 album, Chain Tripping.

AI tools can enhance student learning, even toward traditional goals.

They can help students think more deeply about the purpose of their writing. AI tools can be leveraged to help students develop more nuanced skills and become better writers. Robert Cummings, Chair and Associate Professor of Writing and Rhetoric at the University of Mississippi and a colleague of Bruff’s, recently wrote that “professors can expect students to use ChatGPT to produce first drafts that warrant review for accuracy, voice, audience, and integration of the purpose of the writing project.” Incorporating this “review and refinement” step into student assignments can help students to see the limits of generative AI and to develop key self-editing skills.

They can help students generate ideas. By providing a point of departure, these tools can be especially helpful for students who find it difficult to get "past that blank page" at the start of a writing assignment. Bruff shared a Reddit post from a student with ADHD, who said a tool like ChatGPT is like having a personal tutor he can "ask for clarification and get a deeper understanding of concepts." In another example, from a 2022 paper by Paul Fyfe, one student, noticing the similarity between his own writing and the predicted text, said it was "like reading potential sentences that I would have written in another timeline, like glancing into multiple futures."

AI research tools can help students go in new directions. Elicit is a research assistant tool that can perform literature reviews and find relevant research papers on topics without needing a perfect keyword match. Students can use this tool to help them identify resources and references that would otherwise be difficult to find.

We will need to update our academic integrity norms and policies.

Bruff concluded his presentation by urging academic communities to (re)consider their norms, expectations, and policies around academic integrity and plagiarism. He asked the audience to consider the following questions:

- Is ChatGPT a “someone else” whose words can be plagiarized?

- If using ChatGPT constitutes unauthorized aid, what about a less generative tool like Grammarly? Or Microsoft Word’s spellchecker?

- If you require students to cite their use of ChatGPT, how does one cite an AI text-generation tool?

- How can we communicate new academic integrity norms to students?

- What unit policies can we make that respect the different roles these tools might play in different courses?

Finally, in a recent post on her Learning, Teaching and Leadership blog, Sarah Eaton of the University of Calgary offers "6 tenets" for postplagiarism writing. In the sixth tenet, she concludes: "Historical definitions of plagiarism will not be rewritten because of artificial intelligence; they will be transcended. Policy definitions can – and must – adapt." (Eaton, 2023).

If you are interested in

- rethinking your real goals for student learning,

- redesigning your assignments and assessments (and possibly the way you teach) to better support your revised goals,

- leveraging the utility of generative AI to create more meaningful assignments and more authentic learning experiences,

please contact us (TLL@mit.edu) with your suggestions, questions, and ideas. What are your strategies for engaging with this new reality? We are happy to collaborate with you on the development of effective approaches and to share ideas with the MIT community.

About the Speaker

Derek Bruff is an educator, author, and higher ed consultant. He directed the Vanderbilt University Center for Teaching for more than a decade, where he helped faculty and other instructors develop foundational teaching skills and explore new ideas in teaching. Bruff regularly consults with faculty and administrators across higher education on issues of teaching, learning, and faculty development. Bruff has written two books, Intentional Tech: Principles to Guide the Use of Educational Technology in College Teaching (West Virginia University Press, 2019) and Teaching with Classroom Response Systems: Creating Active Learning Environments (Jossey-Bass, 2009). He writes a weekly newsletter called Intentional Teaching and produces the Intentional Teaching podcast. Bruff has a Ph.D. in mathematics and has taught math courses at Vanderbilt and Harvard University.

Below is a list of AI tools referenced in Derek Bruff's presentation:

| Application | Features | Availability |
|---|---|---|
| Bing | AI-powered search engine developed by Microsoft. | Free to use. Requires the latest version of Microsoft Edge to access all features. |
| Bing Image Creator | Microsoft's image creator, powered by DALL-E. | Free to use in most browsers. |
| ChatGPT | OpenAI's large language model (LLM) chatbot, released in November 2022. | The basic version (GPT-3.5) is free. A subscription-based 'Plus' version is available for $20/month. |
| DALL-E 2 | OpenAI's image generator, which can create original, realistic images and art from a text description. | Works on a system of free and purchased 'credits.' |
| Elicit | AI research assistant that can find relevant papers, summarize takeaways, and extract key information. It can also help with brainstorming, summarization, and text classification. | Free to use with account. |
| Explainpaper | AI-powered tool designed to help readers better understand academic papers. Readers upload a paper and then highlight any confusing text they come across to have it explained. | Free to use with account. 'Plus' ($12/month) and 'Premium' ($20/month) versions are available. |
| Fermat | AI software designed to help with creativity, brainstorming, conceptual art, filmmaking, and writing. | Free to use with account. Paid 'Team' and 'Enterprise' subscriptions coming soon. |
| GPT-J | Free, open-source large language model similar to GPT-3, developed by EleutherAI. | Free and open source. |
| Moonbeam | AI writing assistant for long-form content such as essays, stories, articles, and blogs. | Seven-day trial for $1.00, then $49/month. |
| NovelAI | Monthly subscription service for AI-assisted authorship, storytelling, and virtual companionship, or simply a GPT-powered sandbox for your imagination. | Free trial, then paid subscriptions at three levels: $10, $15, and $25/month. |
| OpenAI Playground | Online platform for exploring, creating, and sharing AI projects in a web-based sandbox environment. | Free to use with account. |
| Otter | Uses AI to write automatic meeting notes with real-time transcription, recorded audio, automated slide capture, and meeting summaries. | Free, limited "Basic" account for individuals; subscription-based "Pro," "Business," and "Enterprise" plans. |
| SciSpace | AI-driven platform for exploring and understanding research papers. It also includes a citation generator, a paraphraser, and an AI detector. | Free to use with account. |
| YouChat | AI chatbot integrated into the You.com search engine. | Free to use with account. |

References

Cordell, R. (2023). Lab 1: Amanuenses to AI. Building a (Better) Book. https://s23bbb.ryancordell.org/labs/Lab1-AI/

D'Agostino, S. (2023, January 31). ChatGPT sparks debate on how to design student assignments now. Inside Higher Ed. https://www.insidehighered.com/news/2023/01/31/chatgpt-sparks-debate-how-design-student-assignments-now

Eaton, S. E. (2023, February 25). 6 Tenets of Postplagiarism: Writing in the Age of Artificial Intelligence. Learning, Teaching and Leadership. https://drsaraheaton.wordpress.com/2023/02/25/6-tenets-of-postplagiarism-writing-in-the-age-of-artificial-intelligence/

Fyfe, P. K. (2022). How to cheat on your final paper: Assigning AI for student writing. AI & Society. https://doi.org/10.1007/s00146-022-01397-z

Scott, I. (2023, July 23). The "Less Content, More Application" Challenge. LinkedIn. https://www.linkedin.com/pulse/less-content-more-application-challenge-inara-scott/

Stewart, D.-L. (2013). Racially minoritized students at U.S. four-year institutions. The Journal of Negro Education, 82(2), 184. https://doi.org/10.7709/jnegroeducation.82.2.0184

Warner, J. (2022, December 11). ChatGPT Can’t Kill Anything Worth Preserving. The Biblioracle Recommends. https://biblioracle.substack.com/p/chatgpt-cant-kill-anything-worth